Regulation essential to curb AI for surveillance, disinformation: rights experts

In a statement on Friday, UN-appointed independent human rights experts said that emerging technologies, including artificial intelligence-based biometric surveillance systems, are increasingly being used “in sensitive contexts”, without individuals’ knowledge or consent.

‘Urgent red lines’ must be drawn

“Urgent and strict regulatory red lines are needed for technologies that claim to perform emotion or gender recognition,” said the experts, who include Fionnuala Ní Aoláin, Special Rapporteur on the promotion and protection of human rights while countering terrorism.

The Human Rights Council-appointed experts condemned the already “alarming” use and impacts of spyware and surveillance technologies on the work of human rights defenders and journalists, “often under the guise of national security and counter-terrorism measures”.

They also called for regulation to address the lightning-fast development of generative AI, which is enabling the mass production of fake online content that spreads disinformation and hate speech.

Real world consequences

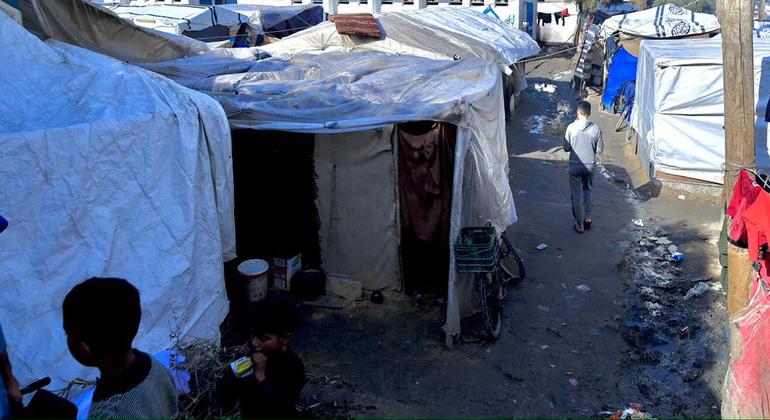

The experts stressed the need to ensure that these systems do not further expose people and communities to human rights violations, including through the expansion and abuse of invasive surveillance practices that infringe on the right to privacy and facilitate gross human rights violations, such as enforced disappearances, as well as discrimination.

They also expressed concern about respect for the freedoms of expression, thought and peaceful protest, and about access to essential economic, social and cultural rights and to humanitarian services.

“Specific technologies and applications should be avoided altogether where the regulation of human rights compliance is not possible,” the experts said.

The experts also expressed concern that generative AI development is driven by a small group of powerful actors, including businesses and investors, without adequate requirements for conducting human rights due diligence or consultation with affected individuals and communities.

The crucial job of internal regulation through content moderation is often performed by individuals working in conditions of labour exploitation, the independent experts noted.

More transparency

“Regulation is urgently needed to ensure transparency, alert people when they encounter synthetic media, and inform the public about the training data and models used,” the experts said.

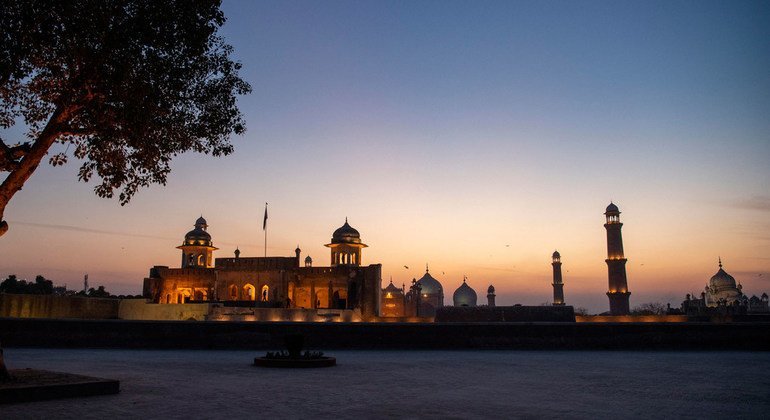

The experts reiterated their calls for caution about digital technology use in the context of humanitarian crises, from large-scale data collection – including the collection of highly sensitive biometric data – to the use of advanced targeted surveillance technologies.

“We urge restraint in the use of such measures until the broader human rights implications are fully understood and robust data protection safeguards are in place,” they said.

Encryption, privacy paramount

They underlined the need for technical solutions, including strong end-to-end encryption and unfettered access to virtual private networks, to secure and protect digital communications.

“Both industry and States must be held accountable, including for their economic, social, environmental, and human rights impacts,” they said. “The next generation of technologies must not reproduce or reinforce systems of exclusion, discrimination and patterns of oppression.”

Special Rapporteurs and other rights experts are appointed by the UN Human Rights Council and mandated to monitor and report on specific thematic issues or country situations. They are not UN staff and do not receive a salary for their work.